Understand Models.

Build Responsibly.

A toolkit to help understand models and enable responsible machine learning

State-of-the-art techniques to explain model behavior

Comprehensive support for multiple types of models and algorithms, during both training and inference

Community-driven, open-source toolkit

Model interpretability helps developers, data scientists, and business stakeholders gain a comprehensive understanding of their machine learning models. It can also be used to debug models, explain predictions, and support audits for regulatory compliance.

Access state-of-the-art interpretability techniques through an open unified API set and rich visualizations.

Understand models through interactive visuals, drawing on a wide range of explainers and techniques. Choose your algorithm and easily experiment with combinations of algorithms.

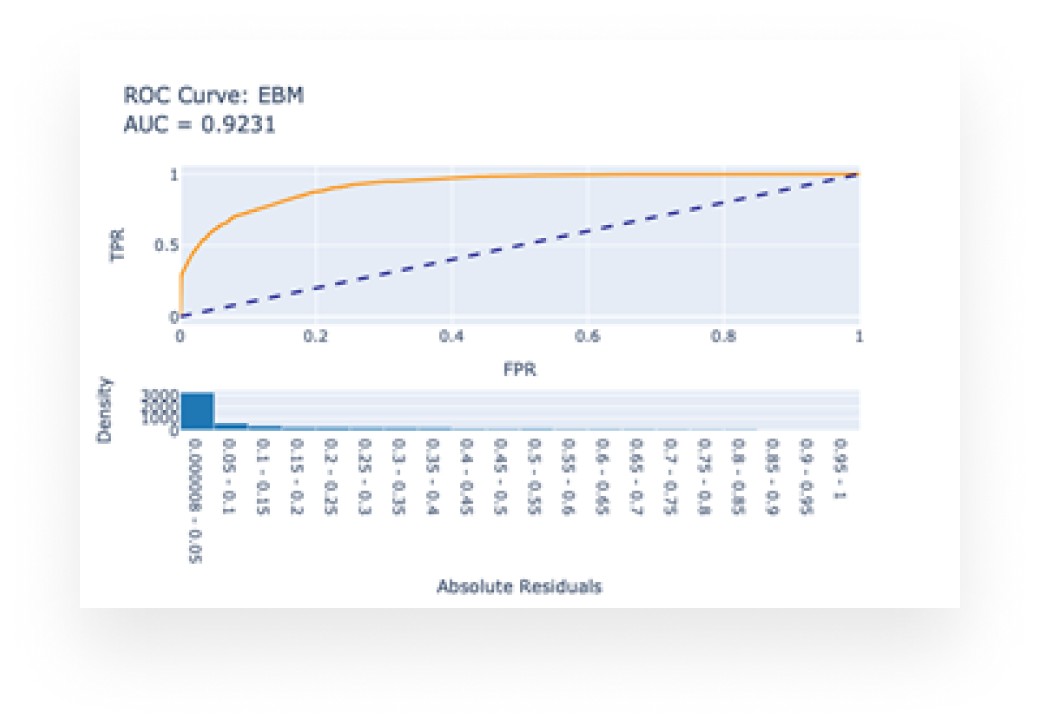

Explore model attributes such as performance and global and local feature importance, and compare multiple models simultaneously. Run what-if analysis by manipulating the data and viewing the impact on the model.

Glass-box models are interpretable because of their structure. Examples include Explainable Boosting Machines (EBMs), linear models, and decision trees.

Glass-box models produce lossless explanations and are editable by domain experts.
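To make "lossless" and "editable" concrete, here is a minimal sketch using a plain linear model, written in standard-library Python rather than with the toolkit's own API. The feature names, weights, and intercept are hypothetical values chosen for illustration. The per-feature contributions add up exactly to the prediction, and a domain expert can change the model by overriding a single weight.

```python
# Hypothetical linear glass-box model: names and numbers are made up for illustration.
INTERCEPT = 0.5
WEIGHTS = {"age": 0.02, "income": 0.0001, "num_late_payments": -0.3}


def explain(weights, intercept, row):
    """Return (prediction, per-feature contributions) for one row."""
    contributions = {name: weights[name] * row[name] for name in weights}
    prediction = intercept + sum(contributions.values())
    return prediction, contributions


row = {"age": 40, "income": 50000, "num_late_payments": 2}
prediction, contributions = explain(WEIGHTS, INTERCEPT, row)

# Lossless: the intercept plus the contributions reproduces the prediction.
reconstructed = INTERCEPT + sum(contributions.values())

# Editable: a domain expert overrides one weight and re-scores the same row.
edited_weights = dict(WEIGHTS, num_late_payments=-0.5)
edited_prediction, edited_contributions = explain(edited_weights, INTERCEPT, row)

print(contributions)
print(prediction, edited_prediction)
```

The same idea carries over to other additive glass-box models, where each feature's term can be inspected and adjusted on its own.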

Black-box models, such as deep neural networks, are challenging to understand. Black-box explainers interpret these models by analyzing the relationship between input features and output predictions. Examples include LIME and SHAP.
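As an illustration of how a black-box explainer can work from inputs and outputs alone, the sketch below computes exact Shapley values, the concept SHAP builds on, by brute-force enumeration of feature coalitions. It is not the SHAP library or its algorithm: the `black_box` function, the instance, and the all-zeros baseline are hypothetical, and full enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def black_box(x):
    """Stand-in for an opaque model; the explainer only ever calls it."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) relative to model(baseline)."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's values; the rest stay at baseline.
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                # Marginal effect of adding feature i to this coalition.
                phi += weight * (value(members | {i}) - value(members))
        phis.append(phi)
    return phis


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(black_box, instance, baseline)
print(phis)
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term `x[1] * x[2]` is split evenly between the two features involved.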

Explore overall model behavior and find top features affecting model predictions using global feature importance

Explain an individual prediction and find features contributing to it using local feature importance

Explain a subset of predictions using group feature importance

See how changes to input features impact predictions with techniques like what-if analysis
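Two of the capabilities above, global feature importance and what-if analysis, can be sketched in a few lines of standard-library Python. This example uses permutation importance as a stand-in technique, not necessarily what the toolkit uses internally. The `model` function, the feature names, and the synthetic data are all hypothetical, and the targets are generated from the model itself so the baseline error is zero.

```python
import random


def model(row):
    """Hypothetical black-box scorer; the 'noise' feature is deliberately ignored."""
    return 3.0 * row["size"] + 1.0 * row["rooms"]


FEATURES = ["size", "rooms", "noise"]
rng = random.Random(0)  # fixed seed so the sketch is repeatable
data = [{name: rng.uniform(0, 10) for name in FEATURES} for _ in range(200)]
targets = [model(row) for row in data]  # synthetic targets, assumed for illustration


def mean_abs_error(rows):
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(feature):
    """Global importance: how much the error grows when one column is shuffled."""
    shuffled = [row[feature] for row in data]
    rng.shuffle(shuffled)
    permuted = [dict(row, **{feature: value}) for row, value in zip(data, shuffled)]
    return mean_abs_error(permuted) - mean_abs_error(data)


global_importance = {name: permutation_importance(name) for name in FEATURES}


def what_if(row, **changes):
    """What-if analysis: change in prediction after editing some input features."""
    return model(dict(row, **changes)) - model(row)


delta = what_if(data[0], size=data[0]["size"] + 1.0)
print(global_importance)
print(delta)
```

Shuffling the ignored `noise` column leaves the error unchanged, so its importance is zero, while `size` ranks above `rooms` because the model weights it more heavily.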

Understand models, debug or uncover issues and explain your model to other stakeholders.

Validate a model before it is deployed and audit it post-deployment.

Understand how models behave, in order to provide transparency about predictions to customers.

Easily integrate with new interpretability techniques and compare against other algorithms.

We encourage you to join the effort and contribute feedback, algorithms, ideas and more, so we can evolve the toolkit together!

Contribute